This page describes a proprietary system to transfer data between servers over encrypted channels, either on a schedule or triggered by file activity, which I built while employed as a Linux system administrator and Linux architect.

| Key details | |
|---|---|
| Brief description: | A system for point-to-point encrypted data transfer channels, each of which automatically synchronises files from source to destination when its trigger conditions are met. |
| Consumer: | Data integration team, ERP development and support team, Financial controls team, Linux system administration team |
| Impact to consumer: | |
| Technical features: | |
| Technologies used: | C, OpenSSL, rsync, Apache HTTP Server, HTML::Mason (Perl), MariaDB |

| Technologies used: | C, OpenSSL, rsync, Apache HTTP Server, HTML::Mason (Perl), MariaDB |

Many corporate processes involve data being processed on one server and then transferred to another. A wide range of transfer mechanisms are in use, such as FTP, SFTP, SCP, and rsync. All of these involve security tradeoffs and some, like FTP, can be quite complex and fragile.

To reduce the support overhead and the general attack surface, I extended the general idea of rsync-over-SSH transfers into the server-to-server transfer manager. This uses an agent at both ends of the transfer, with a central server to manage the overall configuration and receive a copy of transfer logs for operators to review under a unified interface. Dozens of critical internal data flows now rely on this system.

Transfers are triggered either on a schedule (like a crontab), or by an external process creating or removing marker files. The following marker file types are applicable to transfer channels:

- Transfer trigger marker - for triggered (rather than scheduled) transfers, this is a file that an external process creates to signal that it has finished producing the data to transfer; the agent removes this file and then initiates the transfer.

- Block marker - if the block marker is present, the channel will delay transmissions until the block marker is removed. For example, the destination side could have a batch process create a block marker while processing newly received data, so nothing more comes in until the batch process has completed.

- Activity marker - the agent can create a file while a transfer is in progress and remove it on completion, as a signal to surrounding processes (so, for example, a batch process may hold off producing anything until a previous transfer is complete).

- Completion marker - the agent can update the timestamp of a file on completion of a transfer, so that monitoring systems can check whether things are proceeding normally, and dependent processes can decide whether they have any new work to do.

- Failure marker - the agent can create a file on transfer failure, and remove it on success, so that monitoring systems and other processes can take appropriate action.

- Silence marker - the agent can create a file if no data has been transferred for a configurable amount of time, to signal to monitoring systems or other processes that there has been an unexpected lack of data to process.
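The marker behaviour described above can be illustrated with a short C sketch. It is not the agent's own code: the `struct channel_markers` type and all function names are hypothetical, and clearing the silence marker once data flows again is an assumption, since the description above only says when that marker is created.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <fcntl.h>
#include <stdbool.h>
#include <sys/stat.h>
#include <time.h>
#include <unistd.h>

/* Hypothetical per-channel marker paths; NULL means "not configured". */
struct channel_markers {
    const char *trigger;    /* created by an external process */
    const char *block;      /* present: hold off transfers */
    const char *activity;   /* present while a transfer is running */
    const char *completion; /* mtime updated after each successful transfer */
    const char *failure;    /* present after a failed transfer */
    const char *silence;    /* present when no data has arrived for too long */
};

static bool marker_exists(const char *path)
{
    struct stat st;
    return path != NULL && stat(path, &st) == 0;
}

/* Create a marker if it is missing; an existing file is left untouched. */
static int create_marker(const char *path)
{
    if (path == NULL)
        return 0;
    int fd = open(path, O_WRONLY | O_CREAT, 0644);
    if (fd < 0)
        return -1;
    return close(fd);
}

/* Create a marker if needed and set its timestamps to now. */
static int touch_marker(const char *path)
{
    if (path == NULL)
        return 0;
    int fd = open(path, O_WRONLY | O_CREAT, 0644);
    if (fd < 0)
        return -1;
    int rc = futimens(fd, NULL);  /* NULL: set atime and mtime to now */
    close(fd);
    return rc;
}

/* Remove a marker; a marker that is already gone is not an error. */
static int remove_marker(const char *path)
{
    if (path == NULL || unlink(path) == 0 || errno == ENOENT)
        return 0;
    return -1;
}

/* Triggered channels: a block marker defers the transfer; otherwise the
 * trigger marker is consumed (removed) before the transfer starts. */
bool should_start_triggered(const struct channel_markers *m)
{
    if (marker_exists(m->block))
        return false;
    /* A successful unlink() both proves the trigger existed and consumes it. */
    return m->trigger != NULL && unlink(m->trigger) == 0;
}

/* Marker updates around a transfer; errors are ignored for brevity. */
void transfer_started(const struct channel_markers *m)
{
    (void)create_marker(m->activity);
}

void transfer_finished(const struct channel_markers *m, bool ok)
{
    (void)remove_marker(m->activity);
    if (ok) {
        (void)touch_marker(m->completion);
        (void)remove_marker(m->failure);
    } else {
        (void)create_marker(m->failure);
    }
}

/* Raise the silence marker when the last transfer that moved data is older
 * than threshold seconds; clear it otherwise (clearing is an assumption). */
void update_silence(const struct channel_markers *m, time_t last_data,
                    time_t threshold, time_t now)
{
    if (now - last_data > threshold)
        (void)create_marker(m->silence);
    else
        (void)remove_marker(m->silence);
}
```

Consuming the trigger with a single `unlink()` call, rather than checking for it and deleting it in two steps, means the test and the removal happen together, so one trigger file cannot start two transfers.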

The central server polls each agent continuously, transmitting any configuration changes and retrieving any new transfer logs. Problems are shown in the web interface and are also exported as metrics files, which the Zabbix monitoring system reads to detect problems and raise alerts.
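The metrics export could look something like the sketch below. The one-line file format, the `enum channel_status` codes, and `write_metrics()` are all hypothetical, since the page does not say what the exported files contain or which Zabbix items read them. The point being illustrated is writing to a temporary file and renaming it into place, so the monitoring side only ever sees a complete file.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdio.h>
#include <string.h>
#include <unistd.h>

/* Hypothetical status codes for the exported metric. */
enum channel_status { STATUS_OK = 0, STATUS_FAILED = 1, STATUS_SILENT = 2 };

/* Write one line, "<channel> <status> <last_success_epoch>", to path.
 * The data goes to a temporary file that is then rename()d over the
 * target, so a monitoring agent never reads a half-written file. */
int write_metrics(const char *path, const char *channel,
                  enum channel_status status, long long last_success)
{
    char tmp[4096];

    /* Whitespace in the name would break the space-separated format. */
    if (strpbrk(channel, " \t\n") != NULL)
        return -1;
    if (snprintf(tmp, sizeof tmp, "%s.tmp", path) >= (int)sizeof tmp)
        return -1;

    FILE *f = fopen(tmp, "w");
    if (f == NULL)
        return -1;
    int ok = fprintf(f, "%s %d %lld\n", channel, (int)status, last_success) > 0;
    ok = (fclose(f) == 0) && ok;  /* always close, even if fprintf failed */

    if (!ok || rename(tmp, path) != 0) {
        unlink(tmp);
        return -1;
    }
    return 0;
}
```

Because the temporary file sits in the same directory as the target, the `rename()` stays on one filesystem and replaces the old file atomically.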

Each agent listens for connections from the central server and from other agents. The OpenSSL libraries are used to provide encryption and certificate validation. Agents perform the network I/O in a dedicated, unprivileged subprocess, for security isolation.
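The privilege separation for network I/O can be sketched with `fork()` and a `socketpair()`. This is an illustrative outline rather than the agent's implementation: `spawn_network_worker()`, `reap_worker()`, and the echoing `network_loop()` are hypothetical, and a real child would run the OpenSSL-protected connections in place of the placeholder loop. The parent keeps its privileges, which it needs later to start rsync as each channel's user, and talks to the unprivileged child over the socket pair.

```c
#define _DEFAULT_SOURCE  /* for setgroups() on glibc */
#include <grp.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Drop privileges permanently.  Order matters: supplementary groups and
 * the gid must be changed before setuid() gives up the right to do so. */
static int drop_privileges(uid_t uid, gid_t gid)
{
    if (geteuid() == 0 && setgroups(0, NULL) != 0)
        return -1;
    if (setgid(gid) != 0 || setuid(uid) != 0)
        return -1;
    if (uid != 0 && setuid(0) == 0)  /* regaining root must now fail */
        return -1;
    return 0;
}

/* Placeholder for the real TLS-protected network loop: echo bytes back
 * until the parent closes its end. */
static int network_loop(int fd)
{
    char buf[512];
    ssize_t n;
    while ((n = read(fd, buf, sizeof buf)) > 0) {
        if (write(fd, buf, (size_t)n) != n)
            return 1;
    }
    return n < 0 ? 1 : 0;
}

/* Fork a child that drops to uid/gid and handles network I/O.  Returns
 * the child's pid and stores the parent's end of the socket pair in
 * *parent_fd, or returns -1 on failure. */
pid_t spawn_network_worker(uid_t uid, gid_t gid, int *parent_fd)
{
    int sv[2];
    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0) {
        close(sv[0]);
        close(sv[1]);
        return -1;
    }
    if (pid == 0) {                  /* child: unprivileged network side */
        close(sv[0]);
        if (drop_privileges(uid, gid) != 0)
            _exit(127);
        _exit(network_loop(sv[1]));
    }
    close(sv[1]);                    /* parent: keeps its privileges */
    *parent_fd = sv[0];
    return pid;
}

/* Wait for the worker; return its exit code, or -1 if it was killed. */
int reap_worker(pid_t pid)
{
    int status;
    if (waitpid(pid, &status, 0) != pid)
        return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

In production the child would be given a dedicated unprivileged account; passing the caller's own `getuid()` and `getgid()` is only useful for trying the code without root.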

When an agent runs a transfer, it communicates its intent to the agent at the other end over the encrypted connection, and both sides then launch the rsync tool in a subprocess with the UID, GID, and configuration file appropriate to the channel configuration. Progress is recorded in the transfer logs, and the exit status is captured to indicate whether the transfer succeeded.
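The step of running rsync as the channel's user and capturing its exit status might look like this in C. The `run_as()` function is hypothetical, and how each agent's rsync process is connected to the encrypted channel is not described on this page, so the sketch covers only the user switch, the exec, and the exit status.

```c
#define _DEFAULT_SOURCE  /* for setgroups() on glibc */
#include <errno.h>
#include <grp.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Run argv (argv[0] is looked up in PATH) as uid/gid, wait for it, and
 * return its exit status; -1 if it could not be forked or was killed by
 * a signal.  127 means the child could not switch user or exec. */
int run_as(uid_t uid, gid_t gid, char *const argv[])
{
    pid_t pid = fork();
    if (pid < 0)
        return -1;
    if (pid == 0) {
        /* Child: a real agent might call initgroups() here instead, to
         * give the channel's user its normal supplementary groups. */
        if (geteuid() == 0 && setgroups(0, NULL) != 0)
            _exit(127);
        if (setgid(gid) != 0 || setuid(uid) != 0)
            _exit(127);
        execvp(argv[0], argv);
        _exit(127);  /* only reached if exec failed */
    }

    int status;
    while (waitpid(pid, &status, 0) < 0) {
        if (errno != EINTR)
            return -1;
    }
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

The returned value can be written to the transfer log as-is: rsync documents its non-zero exit codes in its manual page, for example 23 for a partial transfer due to an error.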

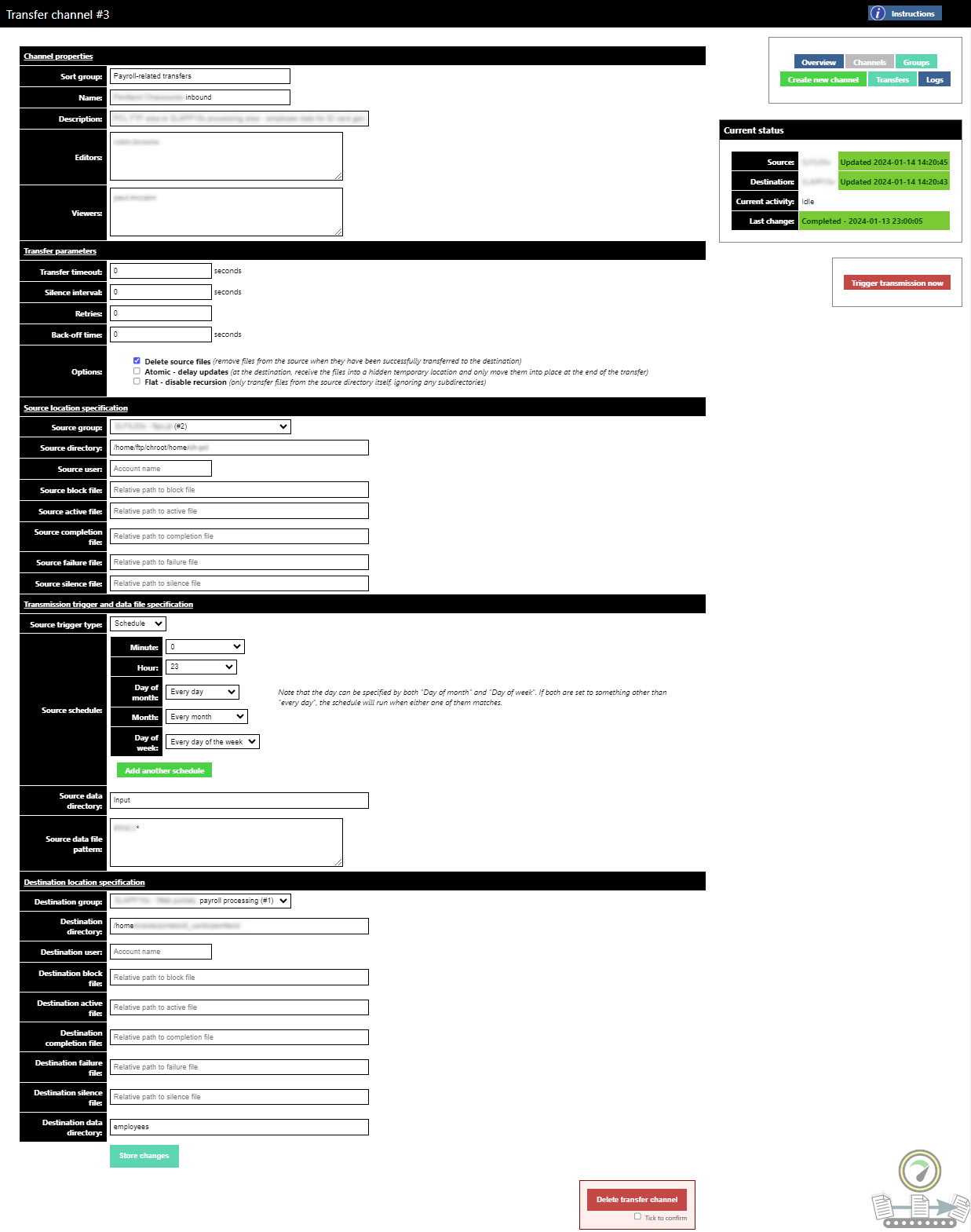

The figure above shows the configuration page of a server-to-server transfer channel in the management tool. The current status of the channel is shown at the top right; this is also reflected in the monitoring system via the exported metrics files.

The tool allows channel configuration to be delegated by listing Active Directory users or groups in the Editors and Viewers boxes. Viewers have read-only access: they can see the channel's status and logs, but cannot change its configuration.
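Delegated access can be modelled as a simple lookup, sketched below. The sketch assumes the user's Active Directory group memberships have already been resolved (for example over LDAP) and are passed in as a list; `channel_access()` and `enum access` are hypothetical names, and treating an Editor as also having read access is an assumption.

```c
#define _POSIX_C_SOURCE 200809L
#include <stdbool.h>
#include <stddef.h>
#include <strings.h>

enum access { ACCESS_NONE, ACCESS_VIEW, ACCESS_EDIT };

/* True if any entry in a NULL-terminated list matches the user name or
 * one of the user's groups.  Case is ignored, since Active Directory
 * account and group names are case-insensitive. */
static bool listed(const char *const *entries, const char *user,
                   const char *const *groups)
{
    for (; entries != NULL && *entries != NULL; entries++) {
        if (strcasecmp(*entries, user) == 0)
            return true;
        for (const char *const *g = groups; g != NULL && *g != NULL; g++)
            if (strcasecmp(*entries, *g) == 0)
                return true;
    }
    return false;
}

/* Editors get read-write access; Viewers get read-only access. */
enum access channel_access(const char *const *editors,
                           const char *const *viewers,
                           const char *user, const char *const *groups)
{
    if (listed(editors, user, groups))
        return ACCESS_EDIT;
    if (listed(viewers, user, groups))
        return ACCESS_VIEW;
    return ACCESS_NONE;
}
```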

Channels do not select source and destination servers directly; instead, they refer to "server groups". In practice most server groups contain only one server, but where services are provided by an active/passive server pair, the server group mechanism ensures that the transfer channel always points to the currently active node.
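Resolving a server group to a single host could work as follows. How the system learns which node of a pair is active is not described above, so the `active` flag and `resolve_group()` are hypothetical. Refusing to pick a host when zero or two nodes claim to be active is a design choice in this sketch, made so that a transfer never goes to a passive node.

```c
#include <stdbool.h>
#include <stddef.h>

struct server {
    const char *hostname;
    bool active;  /* hypothetical: how activity is detected is not specified */
};

/* Resolve a server group to the host a channel should use: the only
 * member of a single-server group, or the one active member of an
 * active/passive pair.  Returns NULL if no unique active node is known,
 * in which case the transfer should not run. */
const struct server *resolve_group(const struct server *members, size_t n)
{
    if (n == 1)
        return &members[0];

    const struct server *found = NULL;
    for (size_t i = 0; i < n; i++) {
        if (!members[i].active)
            continue;
        if (found != NULL)
            return NULL;  /* two nodes claim to be active: refuse */
        found = &members[i];
    }
    return found;
}
```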

Some of the rsync transfer options are surfaced in the Options section, as different teams use this transfer mechanism in different ways. The glob patterns that rsync should match when transferring are configurable here as well.
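Configured glob patterns are commonly turned into rsync filter rules. This sketch uses the usual include-then-exclude idiom: rsync acts on the first rule that matches each file, so one `--include` per pattern followed by a final `--exclude=*` transfers only matching files, and for recursive transfers a leading `--include=*/` stops directories from being excluded before their contents are checked. `build_filter_args()` is a hypothetical helper; the page does not say how the tool actually passes patterns to rsync.

```c
#include <stdbool.h>
#include <stdio.h>
#include <stdlib.h>
#include <string.h>

// Free an argument array returned by build_filter_args().
void free_filter_args(char **args)
{
    if (args == NULL)
        return;
    for (char **p = args; *p != NULL; p++)
        free(*p);
    free(args);
}

// Return a newly allocated "<prefix><value>", or NULL on failure.
static char *concat(const char *prefix, const char *value)
{
    size_t len = strlen(prefix) + strlen(value) + 1;
    char *s = malloc(len);
    if (s != NULL)
        snprintf(s, len, "%s%s", prefix, value);
    return s;
}

// Build a NULL-terminated list of rsync filter options for n glob
// patterns.  Includes come first because rsync uses the first match;
// the trailing exclude drops everything the patterns did not select.
char **build_filter_args(const char *const *patterns, size_t n, bool recursive)
{
    size_t cap = n + 3;  // optional directory rule, n includes, exclude, NULL
    char **args = calloc(cap, sizeof *args);
    if (args == NULL)
        return NULL;

    size_t k = 0;
    if (recursive)
        args[k++] = concat("--include=", "*/");  // keep directories traversable
    for (size_t i = 0; i < n; i++)
        args[k++] = concat("--include=", patterns[i]);
    args[k++] = concat("--exclude=", "*");

    for (size_t i = 0; i < k; i++) {
        if (args[i] == NULL) {  // an allocation failed: release everything
            for (size_t j = 0; j < k; j++)
                free(args[j]);
            free(args);
            return NULL;
        }
    }
    return args;  // args[k] is already NULL from calloc
}
```

When these arguments are passed to rsync through an exec call rather than a shell, patterns such as `*.csv` need no quoting. For recursive transfers, rsync's `--prune-empty-dirs` option avoids creating empty directories at the destination for folders that contained no matching files.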